Fondazione Bruno Kessler - Technologies of Vision

contains material from

Template Matching Techniques in Computer Vision: Theory and Practice

Roberto Brunelli © 2009 John Wiley & Sons, Ltd

One of the drawbacks of template matching is its high computational cost, which is related to the resolution of the images being compared. It is therefore of interest to find a lower-dimensionality representation that:

The most widely used transform satisfying the above constraints is principal component analysis (PCA): a translation and rotation of the original coordinate system that provides a set of directions sorted by decreasing contribution to pattern representation. It is a pattern-specific representation that, in many cases of practical interest, allows us to approximate the pattern well using a limited subset of directions. The better the required approximation, the higher the number of directions required.
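As an illustration of the idea, the following minimal sketch computes a PCA through the singular value decomposition of the centered data and approximates a pattern with a growing number of directions. It uses Python with NumPy and synthetic data; the helper names `pca` and `reconstruct` are hypothetical and are not taken from the original material.

```python
import numpy as np

def pca(samples):
    """PCA of row-vector samples via SVD of the centered data matrix.

    Returns (mean, directions, variances); directions are stored as rows,
    sorted by decreasing variance.
    """
    samples = np.asarray(samples, dtype=float)
    mean = samples.mean(axis=0)            # translation of the coordinate system
    centered = samples - mean
    # Rows of vt are orthonormal principal directions (the rotation).
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    variances = s**2 / (samples.shape[0] - 1)
    return mean, vt, variances

def reconstruct(sample, mean, directions, k):
    """Approximate a sample using only the first k principal directions."""
    basis = directions[:k]
    coefficients = basis @ (sample - mean)
    return mean + basis.T @ coefficients

# Synthetic patterns (an assumption for illustration): 200 samples in 50
# dimensions lying close to a 5-dimensional subspace.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 5)) * np.array([5.0, 4.0, 3.0, 2.0, 1.0])
mixing = np.linalg.qr(rng.normal(size=(50, 5)))[0]   # 50x5, orthonormal columns
data = latent @ mixing.T + 0.01 * rng.normal(size=(200, 50))

mean, directions, variances = pca(data)
errors = [np.linalg.norm(data[0] - reconstruct(data[0], mean, directions, k))
          for k in (1, 3, 5, 10)]
print(errors)   # the approximation error does not increase with k
```

Because the subspaces spanned by the first k directions are nested, the approximation error can only stay equal or decrease as k grows.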

We now read a set of 800 geometrically normalized grey-level images representing faces from multiple races. We then create two different vectorized sets: one extracting the central face region (raceSample1), and an extended set with 8 additional congruent images at 1 pixel distance (raceSample9).
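A possible way to build two such sets is sketched below in Python with NumPy. It assumes that the 8 additional congruent images are the crops displaced by one pixel along the 8 neighbouring directions; the function `vectorized_crops`, the crop geometry, and the random stand-in images are all hypothetical.

```python
import numpy as np

def vectorized_crops(images, top, left, height, width, shifts=((0, 0),)):
    """Crop every image at each (dy, dx) offset and flatten each crop to a row."""
    rows = []
    for image in images:
        for dy, dx in shifts:
            crop = image[top + dy: top + dy + height,
                         left + dx: left + dx + width]
            rows.append(crop.ravel())      # row-major linearization
    return np.array(rows)

rng = np.random.default_rng(0)
images = rng.random((10, 32, 32))          # stand-in for normalized face images

center_only = ((0, 0),)
# Central position plus its 8 neighbours at one pixel distance (assumed layout).
neighbourhood = tuple((dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1))

samples1 = vectorized_crops(images, 8, 8, 16, 16, center_only)
samples9 = vectorized_crops(images, 8, 8, 16, 16, neighbourhood)
print(samples1.shape, samples9.shape)
```

The extended set contains nine rows per image, so it encodes small misalignments of the face region in addition to the variability between faces.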

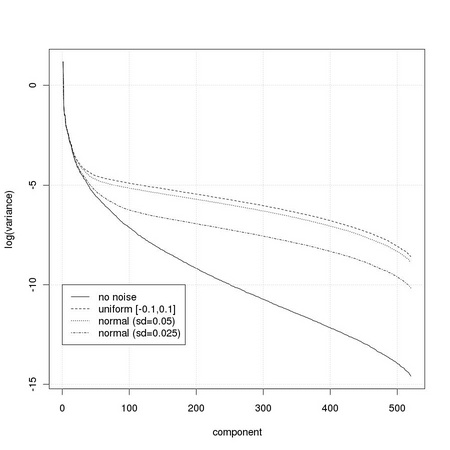

We can now compute the covariance matrices and, using the singular value decomposition, determine their eigenvalues, which represent the variance described by each direction

generating a comparative plot

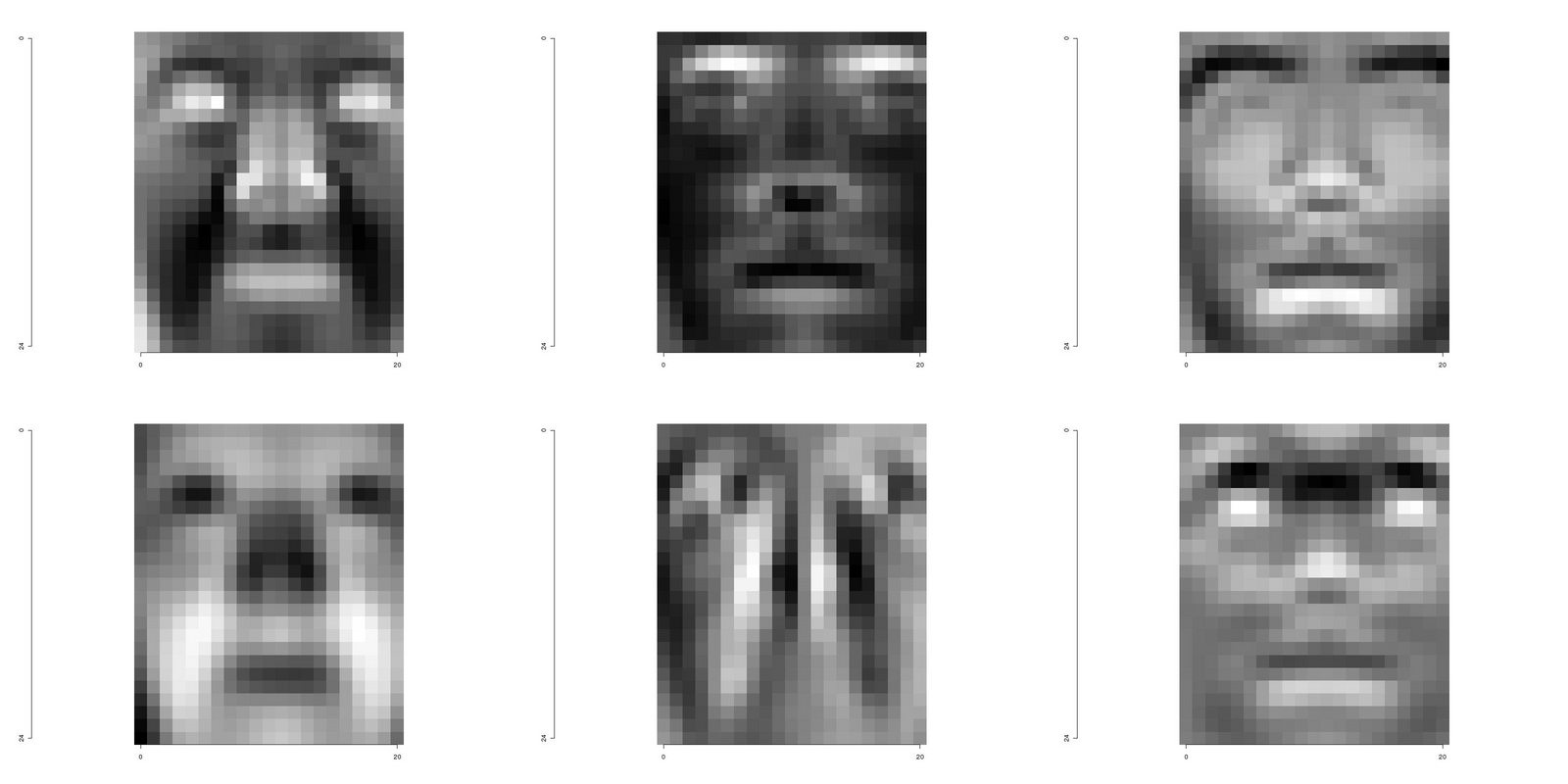
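The eigenvalue computation can be sketched as follows in Python with NumPy, on synthetic data. The function name `covariance_eigenvalues` is hypothetical; it relies on the fact that, for a symmetric positive semi-definite matrix such as a covariance matrix, the singular values coincide with the eigenvalues.

```python
import numpy as np

def covariance_eigenvalues(samples):
    """Eigenvalues of the sample covariance matrix, in decreasing order."""
    samples = np.asarray(samples, dtype=float)
    covariance = np.cov(samples, rowvar=False)   # rows are observations
    # For a symmetric PSD matrix the singular values equal the eigenvalues.
    return np.linalg.svd(covariance, compute_uv=False)

# Synthetic samples (an assumption): 20 dimensions with decreasing spread.
rng = np.random.default_rng(1)
set_a = rng.normal(size=(300, 20)) * np.linspace(3.0, 0.1, 20)

eig = covariance_eigenvalues(set_a)
fraction = np.cumsum(eig) / eig.sum()    # cumulative fraction of total variance
print(fraction[:5])
```

The cumulative fraction of variance is one quantity that could be plotted to compare two sample sets.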

The eigenvectors, or eigenfaces in this case, can be obtained directly from the singular value decomposition and de-linearized, restoring the spatial layout of the images they derive from

and the first three eigenfaces from raceSamples1 and raceSamples9 are shown in Figure 8.2.
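De-linearization amounts to reshaping each eigenvector back to the image size. The following Python/NumPy sketch assumes that the images were linearized in row-major order; the function `eigenimages` and the random stand-in samples are hypothetical.

```python
import numpy as np

def eigenimages(samples, height, width, count):
    """Return the first `count` principal directions reshaped as images."""
    samples = np.asarray(samples, dtype=float)
    centered = samples - samples.mean(axis=0)
    # Rows of vt are the eigenvectors of the covariance matrix.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    # Undo the row-major linearization (assumed) to restore the 2D layout.
    return vt[:count].reshape(count, height, width)

rng = np.random.default_rng(2)
samples = rng.random((40, 12 * 10))      # stand-in for vectorized 12x10 images
first_three = eigenimages(samples, 12, 10, 3)
print(first_three.shape)
```

If the images were linearized column by column instead, the reshape would need `order="F"` to recover the correct layout.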


The required number of principal components depends on the specific task considered and on the required performance. In some cases, the appropriate number can be determined by inspecting the variance captured by the different directions. One such case is that of images corrupted by noise. Usually, for patterns not corrupted by noise, the variance associated with the different directions decreases quickly. When noise is present, its contribution may dominate the lowest-variance directions. This is particularly evident for white noise, which is characterized by a constant variance in all directions due to its spherical distribution. We are then interested in determining the crossover point at which the contribution of the signal starts to be dominated by the noise.

We generate a set of pure noise images using two different types of noise: uniform and normal.
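A minimal Python/NumPy sketch of generating the two kinds of noise images is given below. The image size, the number of images, and the half-width of the uniform distribution are assumptions chosen for illustration; only the standard deviation of 0.05 for the normal noise appears in the text.

```python
import numpy as np

rng = np.random.default_rng(3)
n_images, height, width = 100, 16, 16    # hypothetical set size and geometry

# Zero-mean uniform noise on [-a, a]; its variance is a**2 / 3.
half_width = 0.05                        # hypothetical value
uniform_noise = rng.uniform(-half_width, half_width,
                            size=(n_images, height, width))

# Zero-mean normal noise with standard deviation 0.05.
normal_noise = rng.normal(0.0, 0.05, size=(n_images, height, width))

print(uniform_noise.std(), normal_noise.std())
```

Both noise fields are independent from pixel to pixel, which is what makes their variance the same along every direction of the image space.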

We can now perform PCA separately on the set of images corrupted by uniform noise

and on the set of images corrupted by normal noise with a standard deviation of 0.05

and with a standard deviation of 0.025

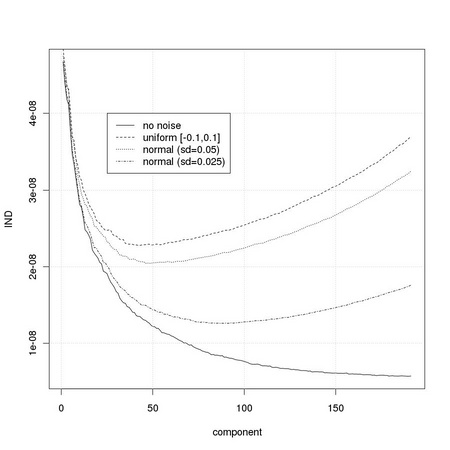
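The effect of noise on the eigenvalue spectrum can be reproduced on synthetic data. In the Python/NumPy sketch below, a low-rank signal stands in for the face images (an assumption), and normal noise with standard deviation 0.05 is added; the trailing eigenvalues of the noisy set flatten out near the noise variance instead of dropping to zero.

```python
import numpy as np

def covariance_eigenvalues(samples):
    """Eigenvalues of the sample covariance matrix, in decreasing order."""
    covariance = np.cov(np.asarray(samples, dtype=float), rowvar=False)
    return np.linalg.svd(covariance, compute_uv=False)

rng = np.random.default_rng(4)
n_samples, dim, rank = 2000, 30, 5       # hypothetical sizes

# Stand-in signal: patterns confined to a 5-dimensional subspace.
signal = rng.normal(size=(n_samples, rank)) @ rng.normal(size=(rank, dim))

sigma = 0.05
noisy = signal + rng.normal(0.0, sigma, size=(n_samples, dim))

clean_eig = covariance_eigenvalues(signal)
noisy_eig = covariance_eigenvalues(noisy)

print(clean_eig[rank:].max())                 # numerically zero
print(noisy_eig[rank:].mean(), sigma**2)      # noise floor close to sigma**2
```

With a finite number of samples the noise eigenvalues are spread around the noise variance rather than exactly equal to it, so the plateau is only approximately flat.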

Let us define a simple indicator function following the description of Section TM8.1.2

and apply it to the eigenvalue sets just computed.
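The definition given in Section TM8.1.2 is not reproduced on this page. As a stand-in, the Python/NumPy sketch below implements Malinowski's factor indicator function, a criterion commonly used for this purpose, which may differ from the one intended there. For c eigenvalues obtained from r samples, the real error when keeping k directions is RE(k) = sqrt(sum of the discarded eigenvalues / (r (c - k))), and the indicator is IND(k) = RE(k) / (c - k)^2; its minimum suggests the number of significant directions. The function name and the synthetic spectrum are hypothetical.

```python
import numpy as np

def malinowski_indicator(eigenvalues, n_samples):
    """Malinowski's indicator IND(k) for k = 0 .. c-1 retained directions.

    This is an assumed stand-in for the indicator of Section TM8.1.2.
    """
    lam = np.sort(np.asarray(eigenvalues, dtype=float))[::-1]
    c = lam.size
    # tail[k] = sum of the eigenvalues discarded when keeping k directions.
    tail = np.cumsum(lam[::-1])[::-1]
    discarded = c - np.arange(c)                  # c - k
    real_error = np.sqrt(tail / (n_samples * discarded))
    return real_error / discarded**2

# Synthetic spectrum: 5 signal eigenvalues above a flat noise floor of 0.05**2.
spectrum = np.concatenate([[10.0, 8.0, 6.0, 4.0, 2.0], np.full(25, 0.05**2)])
indicator = malinowski_indicator(spectrum, n_samples=2000)
print(int(np.argmin(indicator)))                  # index of the minimum
```

On this spectrum the indicator drops sharply once all signal directions are retained and then grows again, because the real error stays constant over the flat floor while the denominator shrinks.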
